
Lesson 1: Bits and Bytes

In this computer science video tutorial, we introduced some of the basic notions that we will use to explain the inner workings of a computer. The focus of this series is on how computers function internally, with a special emphasis on how architecture affects the performance of computer programs.

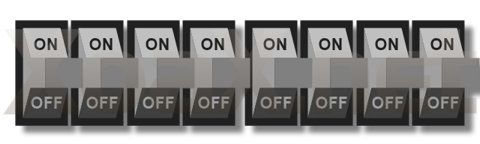

This lesson introduced the bit, which is the smallest unit of data in a computer. A bit can have one of two values: zero or one. The word bit is a shortened form of the words binary digit. Internally, a computer stores bits in small switches, much like a simple light switch. The state of a switch is designated as one for on and zero for off.

On a larger scale, these switches are grouped in sets of eight, which encode eight bits, or one byte, of data. A byte, or eight bits, is the smallest amount of memory that is directly addressable in most computers. That is, a single memory location in a typical computer stores 8 bits.
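To make the switch analogy concrete, here is a minimal Python sketch (not part of the original lesson) that models a byte as eight on/off switches. The function names `bits_of_byte` and `byte_from_bits` are hypothetical, chosen only for illustration, and the bits are listed from the most significant (leftmost) to the least significant (rightmost). The `bytearray` at the end is only a stand-in for byte-addressable memory: each element holds one byte, so every element is a value from 0 to 255.

```python
def bits_of_byte(value: int) -> list[int]:
    """Return the 8 switch states (1 = on, 0 = off) of a byte, most significant bit first."""
    if not 0 <= value <= 255:
        raise ValueError("a byte holds values from 0 to 255")
    # Shift each bit position down to the lowest place and keep only that bit.
    return [(value >> position) & 1 for position in range(7, -1, -1)]


def byte_from_bits(bits: list[int]) -> int:
    """Pack 8 switch states (most significant first) back into one byte value."""
    if len(bits) != 8 or any(b not in (0, 1) for b in bits):
        raise ValueError("expected exactly eight 0/1 values")
    value = 0
    for b in bits:
        # Shift the bits collected so far left one place and append the next switch state.
        value = (value << 1) | b
    return value


# A bytearray stores one byte per element; the index plays the role of an address.
memory = bytearray([0, 13, 167, 255])
for address, value in enumerate(memory):
    print(address, value, bits_of_byte(value))
```

Packing and unpacking are inverses, so `byte_from_bits(bits_of_byte(v))` gives back `v` for every byte value.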

Each 8-bit byte can be read as an eight-digit binary number, which translates to a value from 0 to 255 in decimal. Decimal numbers are what we usually use for arithmetic. In decimal, each digit is multiplied by a power of ten: the ones digit is multiplied by ten to the zero, or one; the tens digit is multiplied by ten to the one, or ten; the hundreds digit is multiplied by ten to the two, or one hundred; and so on. So, 615 is 6 times ten to the two, plus 1 times ten to the one, plus 5 times ten to the zero.
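The positional rule can be checked with a short Python sketch. The helper `positional_terms` is a hypothetical name; it pairs each digit with the power of the base it is multiplied by. The last line confirms that eight binary digits give 2^8 = 256 distinct values, from 00000000 (0) to 11111111 (255), using Python's built-in `int(text, 2)`.

```python
def positional_terms(digits: str, base: int = 10) -> list[tuple[int, int]]:
    """Pair each digit with the power of `base` it is multiplied by (leftmost digit first)."""
    n = len(digits)
    # int(d, base) converts a single digit character to its numeric value.
    return [(int(d, base), base ** (n - 1 - i)) for i, d in enumerate(digits)]


terms = positional_terms("615")
print(terms)                          # [(6, 100), (1, 10), (5, 1)]
print(sum(d * p for d, p in terms))   # 615

# Eight binary digits: the smallest value is 00000000 and the largest is 11111111.
print(2 ** 8, int("00000000", 2), int("11111111", 2))  # 256 0 255
```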

In binary, digits can only be 0 or 1, instead of 0 through 9 as in decimal. As such, the digits are multiplied by powers of two instead of powers of ten. In binary, the number 1101 is one times two to the three, plus one times two to the two, plus zero times two to the one, plus one times two to the zero. In decimal, we write that as

1*2^3 + 1*2^2 + 0*2^1 + 1*2^0 = 1*8 + 1*4 + 0*2 + 1*1 = 8 + 4 + 1 = 13
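The same sum can be computed programmatically. This minimal Python sketch (the function name `binary_to_decimal` is hypothetical) adds up digit × 2^position, reading the digits from right to left so that position 0 is the ones place, exactly as in the expansion above. It is cross-checked against Python's built-in `int(text, 2)`.

```python
def binary_to_decimal(bits: str) -> int:
    """Evaluate a string of 0s and 1s as a base-2 number."""
    total = 0
    # Walk the digits from right to left so that position 0 is the ones place.
    for position, digit in enumerate(reversed(bits)):
        if digit not in "01":
            raise ValueError(f"not a binary digit: {digit!r}")
        total += int(digit) * 2 ** position
    return total


print(binary_to_decimal("1101"))  # 13
print(int("1101", 2))             # 13, using Python's built-in base conversion
```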

Writing eight binary digits for every byte is not economical, so a number system was developed that represents any of a byte's 256 values with only two digits. We start by splitting a byte into two groups of four bits, called nibbles. Each nibble has 16 different configurations, or values, as a binary number:

0000 0001 0010 0011 0100 0101 0110 0111

1000 1001 1010 1011 1100 1101 1110 1111

These 16 configurations can be put into correspondence with the hexadecimal digits 0 through F:

0 1 2 3 4 5 6 7 8 9 A B C D E F
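The full correspondence between the 16 nibble patterns and the hexadecimal digits can be generated rather than memorized. In this Python sketch, `format(n, "04b")` writes a value as four binary digits and `format(n, "X")` as an uppercase hexadecimal digit; the dictionary name `NIBBLE_TO_HEX` is only illustrative.

```python
# Build the 16-entry table: four-bit pattern -> hexadecimal digit.
NIBBLE_TO_HEX = {format(n, "04b"): format(n, "X") for n in range(16)}

for pattern, hex_digit in NIBBLE_TO_HEX.items():
    # Show the pattern, its hexadecimal digit, and its decimal value (0-15).
    print(pattern, hex_digit, int(pattern, 2))
```

The first line printed is `0000 0 0` and the last is `1111 F 15`, matching the two rows of patterns and the row of digits above.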

Note that hexadecimal digits are the same as decimal digits from 0 to 9. After that, the letters A through F represent 10 through 15, respectively. With this system, a byte like 10100111 can be written with two hexadecimal digits as A7: the first nibble, 1010, is A, and the second nibble, 0111, is 7.
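Splitting a byte into its two nibbles takes one shift and one mask. The sketch below (the function name `byte_to_hex` is hypothetical) takes the high nibble with `value >> 4` and the low nibble with `value & 0xF`, then looks each one up in the digit string `0123456789ABCDEF`. Python's built-in `format(value, "02X")` is shown alongside for comparison.

```python
HEX_DIGITS = "0123456789ABCDEF"


def byte_to_hex(value: int) -> str:
    """Write one byte (0-255) as exactly two hexadecimal digits."""
    if not 0 <= value <= 255:
        raise ValueError("a byte holds values from 0 to 255")
    high = value >> 4     # upper four bits: 1010 for 10100111
    low = value & 0xF     # lower four bits: 0111 for 10100111
    return HEX_DIGITS[high] + HEX_DIGITS[low]


print(byte_to_hex(0b10100111))      # A7
print(format(0b10100111, "02X"))    # A7, via Python's built-in formatting
```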

With 16 possibilities for each digit, hexadecimal is a base-16 number system. That is, the digits are multiplied by powers of 16. So, in hexadecimal, A8D2 is equal to 10*16^3 + 8*16^2 + 13*16^1 + 2*16^0 = 10*4096 + 8*256 + 13*16 + 2*1 = 40960 + 2048 + 208 + 2 = 43218 in decimal. Since a number like 34 could have different values depending on whether it is written in decimal or hexadecimal, we prefix hexadecimal numbers with 0x, as in 0x34, to indicate that the number is in hexadecimal rather than decimal. That way we know that the value is fifty-two (3*16 + 4), rather than thirty-four.
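Evaluating a hexadecimal number works the same way as evaluating a binary one, with 16 in place of 2. This minimal Python sketch (the function name `hex_to_decimal` is hypothetical) uses Horner's rule: it multiplies the running total by 16 before adding each digit, which gives the same result as summing digit × 16^position. It also accepts an optional 0x prefix. Python itself uses the 0x prefix for hexadecimal literals, so `0xA8D2` can be typed directly for comparison.

```python
def hex_to_decimal(text: str) -> int:
    """Evaluate a hexadecimal string (digits 0-9, A-F, optional 0x prefix) as a base-16 number."""
    if text[:2].lower() == "0x":
        text = text[2:]  # accept an optional 0x prefix
    digits = "0123456789ABCDEF"
    total = 0
    for ch in text.upper():
        value = digits.find(ch)
        if value < 0:
            raise ValueError(f"not a hexadecimal digit: {ch!r}")
        # Shift everything seen so far one hexadecimal place left, then add the new digit.
        total = total * 16 + value
    return total


print(hex_to_decimal("A8D2"))  # 43218
print(0xA8D2)                  # 43218, a hexadecimal literal in Python
print(0x34, 34)                # 52 34: the same digits, different bases
```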

© 2007–2024 XoaX.net LLC. All rights reserved.